Is Everything Made of Information?

- Hermes Solenzol

- Nov 24, 2023


Information is better than matter or mind as the fundamental nature of reality

What is the ultimate nature of reality?

Can everything that exists be reduced to a single entity?

Since antiquity, philosophers have thought so. There are three main ideas that keep resurfacing in different forms:

Materialism: Everything is made of matter. Since Einstein showed that E=mc^2, we know that matter and energy can be converted into each other, so materialism needs to be restated as: everything is made of matter-energy. A more developed version of materialism is naturalism: the idea that the world is made solely of matter, energy and the laws that govern them.

Idealism: Matter is just a screen, an illusion. Behind it, the world is made of ideas, or mind, or spirit. One version of idealism is pantheism, which holds that God is in everything, or that the world is a part of God. Another variation of idealism that has recently come into vogue is panpsychism, the idea that everything is made of consciousness.

Dualism: The world is made of both matter and mind. This includes religions like Christianity, which believe that God made the material world but is separate from it, a view that entails a rejection of pantheism.

Here, I propose an alternative: that everything that exists can be reduced to information.

Everything is information.

Let’s call this idea the Information Paradigm.

Advantages of the Information Paradigm

With the advent of computers, the internet and the information age, everybody has become familiar with the concept of information. We buy information in the form of downloadable music, movies and books. We also pay for education, another form of information. In fact, money is just information, a bunch of numbers stored in the computers of banks. Cryptocurrency has made this even more real.

The Information Paradigm could be considered a form of Idealism. However, unlike mind, spirit or consciousness, information is quantifiable. And while believing that mind is separate from matter leads to the problem of explaining how they interact, information is as physical as matter and energy. Physical quantities like entropy, and biological structures like genes, can be interpreted as information.

One of the problems with Materialism is that, if everything is matter/energy, it cannot explain the existence of the laws that govern it. Since these laws are information, Materialism is tacitly admitting that at least some information is part of the basic nature of reality.

Dualism has always been in trouble because it cannot explain how two completely different things, mind and matter, can interact with each other. For example, if my mind is non-material and decides to move my hand, that non-material decision has to be transformed at some point into the energy of the action potentials in my nerves. But energy cannot arise from something non-material, because that would violate the principle of conservation of energy. However, if we understand both mind and matter as information, there is no problem explaining how they interact.

In fact, our minds are not the only non-material things. Books, songs and computer programs are also non-material objects. This was pointed out in the book The Self and Its Brain, co-written by the neuroscientist Sir John Eccles and the philosopher Karl Popper in a last, brave attempt to defend Dualism. Today, however, we are all familiar with the idea that all these non-material objects are made of information. Indeed, we can say how much information is in a song, a book, a movie or a computer program.
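To make "how much information" concrete, here is a minimal sketch that uses Shannon's measure, H = −Σ p log₂ p, computed from how often each character appears in a message. This is one standard way to quantify information, not necessarily the definition the Information Paradigm needs, and the function names are hypothetical. Because it ignores correlations between neighbouring characters, for real text it tends to overestimate the information per character.

```python
import math
from collections import Counter

def shannon_bits_per_symbol(message: str) -> float:
    """Average information per symbol, H = sum(p * log2(1/p)), from symbol frequencies."""
    counts = Counter(message)
    total = len(message)
    return sum((n / total) * math.log2(total / n) for n in counts.values())

def estimated_total_bits(message: str) -> float:
    """Zeroth-order estimate: bits per symbol times the number of symbols.
    Correlations between symbols are ignored."""
    return shannon_bits_per_symbol(message) * len(message)

print(shannon_bits_per_symbol("aaaa"))  # 0.0: a perfectly predictable message
print(shannon_bits_per_symbol("abab"))  # 1.0: one bit per symbol
print(estimated_total_bits("to be or not to be"))
```

A perfectly repetitive message carries no information per symbol, while one that alternates between two equally frequent symbols carries one bit per symbol.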

When applied to different sciences, the Information Paradigm is useful to conceptualize things and explain some notorious problems.

The challenge: defining information

But first we need to face the challenge of giving a definition of information that can be used as a unifying concept for everything that exists.

There are, indeed, many well-defined ideas of information, for example, in computer science and thermodynamics. Would it be possible to show that these different concepts all point to the same thing?

In the past, information was understood as something that was exchanged between people. However, modern ideas of information in science conceive it as something independent of humans, just like matter and energy do not need us to exist. Thus, we speak of the amount of information contained in human DNA or the fate of information inside a black hole. In our daily lives, we deal with information the same way we used to deal with energy and matter: as something that can be produced, stored and used.

There is a problem with defining information as a basic property of the world. The moment we propose that X is the stuff of which everything is made, it becomes very difficult to define X, because any such definition would be in terms of something else. But since we propose that this “something else” is made of X, all such definitions tend to become circular.

All other ontologies have the same problem. It is hard to define ideas or spirit in Idealism, God in Pantheism and consciousness in Panpsychism.

Materialism seems to elude this problem because we have good definitions of matter and energy in physics. However, Materialism faces the problem of defining the nature of the laws of physics, which exist apart from matter and energy and control them.

That’s why some thinkers talk of Naturalism instead of Materialism. According to Naturalism, ultimate reality is matter/energy plus the laws of nature. Ironically, this seems to lead us back to a form of Dualism, since the laws of nature are distinct from matter/energy. But if matter/energy can be reduced to information, and the laws of nature are information, we are back to a single, unifying concept.

Properties of information

I think that soon physics will give us a rigorous definition of information that can be applied to computer science, thermodynamics, Quantum Mechanics, and all other sciences.

For now, instead of trying to define information, I will list some of its properties:

It is quantifiable.

It defines relationships or interactions between objects.

Unlike energy, the amount of information of a closed system can increase. This is not because information can come from nothing, but because information can produce more information.

The process by which information produces more information is an algorithm: something similar to a computer program. Nature is full of algorithms, which generate information following well-defined rules. Those rules are also information, and have been previously generated by other algorithms. I give examples below.

It takes energy to destroy information.

In thermodynamics, information is the same thing as entropy.

The laws of nature are information. Many of the laws of nature are emergent: they arose at some point in the history of the Universe. These emergent laws were created by algorithms that used previously existing laws.
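The idea that a small set of rules can keep generating new patterns can be illustrated with an elementary cellular automaton. Rule 30 is my choice of example, not one the article uses: the entire rule is an 8-bit number, yet starting from a single "on" cell it produces an irregular pattern that grows at every step. Whether this counts as an increase of information depends on how information is measured; the sketch assumes the naive measure of how long a literal description of each row would be.

```python
def step(cells: list[int], rule: int) -> list[int]:
    """One update of an elementary cellular automaton with zero boundaries.
    Each new cell is read from bit (4*left + 2*centre + right) of the 8-bit rule."""
    padded = [0] + cells + [0]
    return [
        (rule >> (padded[i - 1] * 4 + padded[i] * 2 + padded[i + 1])) & 1
        for i in range(1, len(padded) - 1)
    ]

def run(rule: int, width: int, steps: int) -> list[list[int]]:
    """Start from a single 'on' cell in the middle and apply the rule repeatedly."""
    cells = [0] * width
    cells[width // 2] = 1
    history = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        history.append(cells)
    return history

for row in run(rule=30, width=31, steps=15):
    print("".join("#" if c else "." for c in row))
```

The rule never changes, but each printed row needs a longer literal description than the one before it.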

Physics: measurements as transfer of information

The fact that information is quantifiable suggests that there cannot be an infinite amount of information. To state it more formally, a closed system must have a finite amount of information.

Since the Universe is a closed system, it probably contains a finite amount of information.

This could explain some of the puzzles that we find in Quantum Mechanics. If the amount of information contained in an object is finite, then as we move down to the sub-atomic level, we will find systems that contain very little information.

Heisenberg’s Uncertainty Principle could be explained by the fact that a moving particle contains a finite, and small, amount of information. So, if we ask it its speed (strictly, its momentum), it cannot tell us its location, and vice versa.

The collapse of the wave function when we measure a quantum system could be explained by the fact that the measuring process transfers information from the macroscopic measuring system, which has lots of information, to the microscopic quantum system, which has so little information that it exists in an undefined state. This makes explanations involving multiple universes, or consciousness acting at the quantum level, unnecessary.

The idea that Quantum Mechanics could be understood in terms of information was first proposed by physicist John Archibald Wheeler, with his famous phrase “it from bit”:

“It from bit. Otherwise put, every it — every particle, every field of force, even the space-time continuum itself — derives its function, its meaning, its very existence entirely — even if in some contexts indirectly — from the apparatus-elicited answers to yes-or-no questions, binary choices, bits. It from bit symbolizes the idea that every item of the physical world has at bottom — a very deep bottom, in most instances — an immaterial source and explanation; that which we call reality arises in the last analysis from the posing of yes-no questions and the registering of equipment-evoked responses; in short, that all things physical are information-theoretic in origin and that this is a participatory universe.”

The location of a single point in space-time cannot be measured with absolute precision, because this would require that space contain infinite information. This means that space-time must be granular, quantized. Indeed, physics suggests a smallest meaningful unit of space, the Planck length, and a smallest unit of time, the Planck time. We cannot define where a point is located with more precision than a Planck length. We cannot establish when something happened with more precision than a Planck time. The Planck time is the ultimate present.

This granularity of space-time means that information cannot move at infinite speed, because that, too, would require infinite amounts of information. Information can travel, at most, one Planck length in one Planck time. This is the speed of light, c.
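The last step of this argument can be checked with arithmetic. The Planck length and Planck time are built from the reduced Planck constant ħ, the gravitational constant G and c, as l_P = √(ħG/c³) and t_P = √(ħG/c⁵), so their ratio is exactly c. The sketch assumes CODATA 2018 values for the constants; it confirms the ratio, not the claim that space-time is granular.

```python
import math

# CODATA 2018 values, SI units
HBAR = 1.054571817e-34   # reduced Planck constant, J*s
G = 6.67430e-11          # Newtonian gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0        # speed of light, m/s (exact by definition)

planck_length = math.sqrt(HBAR * G / C**3)   # about 1.616e-35 m
planck_time = math.sqrt(HBAR * G / C**5)     # about 5.391e-44 s

print(f"Planck length: {planck_length:.4e} m")
print(f"Planck time:   {planck_time:.4e} s")
print(f"ratio:         {planck_length / planck_time:.6e} m/s")  # c, up to rounding
```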

Indeed, in the Theory of Relativity, it is not just light or matter which cannot travel at speeds faster than c. It is information itself.

Thermodynamics: information is entropy

Imagine a box full of black and white balls. If all the black balls are on the right and all the white balls on the left, the system contains little information: we can describe it as I did in the previous sentence. However, if we mix all the balls, then to describe the system we need to specify the location of every black ball and every white ball. That would require lots of information.

In the first state, the system has little information and low entropy. In the second state, it has lots of information and high entropy.

In thermodynamics, information and entropy are the same thing.
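The box of balls can be made quantitative by counting arrangements. A sketch, assuming 100 balls of which 50 are black: the sorted box allows a single arrangement, while the mixed box allows any of C(100, 50) placements of the black balls, and log₂ of that count is the number of bits needed to say which one we have. Boltzmann's formula S = k_B ln W turns the same count into thermodynamic entropy, which is why the two can be identified up to a constant factor of k_B ln 2 per bit. The function names are hypothetical.

```python
import math

BOLTZMANN = 1.380649e-23  # Boltzmann constant k_B, J/K

def bits_for(arrangements: int) -> float:
    """Bits needed to single out one arrangement among `arrangements` possible ones."""
    return math.log2(arrangements)

def boltzmann_entropy(arrangements: int) -> float:
    """Thermodynamic entropy S = k_B ln W for the same count, in J/K."""
    return BOLTZMANN * math.log(arrangements)

sorted_box = 1                  # "black on the right, white on the left": one arrangement
mixed_box = math.comb(100, 50)  # any placement of 50 black balls among 100 positions

print(bits_for(sorted_box))     # 0.0
print(bits_for(mixed_box))      # about 96.3
print(boltzmann_entropy(mixed_box))
# The two measures differ only by a constant: S = bits * k_B * ln 2.
```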

If we want to store information in a system, we need it to have low entropy. In the first state of the box of black and white balls described above, we could write words by putting some white balls among the black balls on the right, and some black balls among the white balls on the left. However, if all the balls are mixed up, we cannot write anything with them… unless we expend a lot of energy to move enough balls to create a suitable background for the words.

If we tried to write too many words using the balls, we would end up making a mess and all the information we want to convey would get lost. There is a limit to how much information we can put into a system, which is defined by its entropy.

It works like this: a system with high entropy contains a lot of its own information, so it would not let us use it to store our own information. The ability of a system to store information depends on its size (total amount of information it can contain) and its entropy (how much of that information is already in use).

We are all familiar with this. The amount of information we can put on our computer’s hard drive depends on how big the drive is and how much information is already on it. If we want to make room for more information, we need to erase something. And to do that, we need to spend energy, because erasing something means decreasing the entropy of the system. This is known as Landauer’s principle.
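Landauer's principle also puts a number on the minimum cost of erasure: at least k_B T ln 2 of heat per erased bit, where T is the temperature of the surroundings. A short calculation, assuming room temperature (300 K) and taking a gigabyte as 8 × 10⁹ bits; real hard drives dissipate vastly more than this theoretical floor.

```python
import math

BOLTZMANN = 1.380649e-23  # Boltzmann constant k_B, J/K (exact in the 2019 SI)

def landauer_limit_joules(bits: float, temperature_k: float) -> float:
    """Minimum heat dissipated when erasing `bits` bits at temperature T:
    E = bits * k_B * T * ln 2."""
    return bits * BOLTZMANN * temperature_k * math.log(2)

per_bit = landauer_limit_joules(1, 300.0)     # about 2.87e-21 J
gigabyte = landauer_limit_joules(8e9, 300.0)  # about 2.3e-11 J
print(f"{per_bit:.3e} J per bit, {gigabyte:.3e} J per gigabyte")
```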

When a system is complex enough, it becomes an algorithm that is able to increase its own information. In other words, it starts mixing itself up and increasing its entropy.

It is possible that this is what creates the arrow of time: the fact that time is asymmetrical and flows from the past to the future. There is no arrow of time at the quantum level; it only appears in macroscopic systems. This is a crucial issue because, without the arrow of time, it doesn’t make sense to speak of cause and effect.

Molecular Biology: life as information-processing

As I explain in my article The Secret of Life, the key concept to understand life is homeostasis. Homeostasis means the delicately maintained chemical balance in living beings, in which the concentrations of all the chemicals stay within narrow limits.

The chemical composition of non-living systems stays the same, either because there are no chemical reactions at all (as in a rock), or because all reactions are at chemical equilibrium. In contrast, in living organisms all compounds are constantly reacting with each other and these reactions are far from chemical equilibrium.

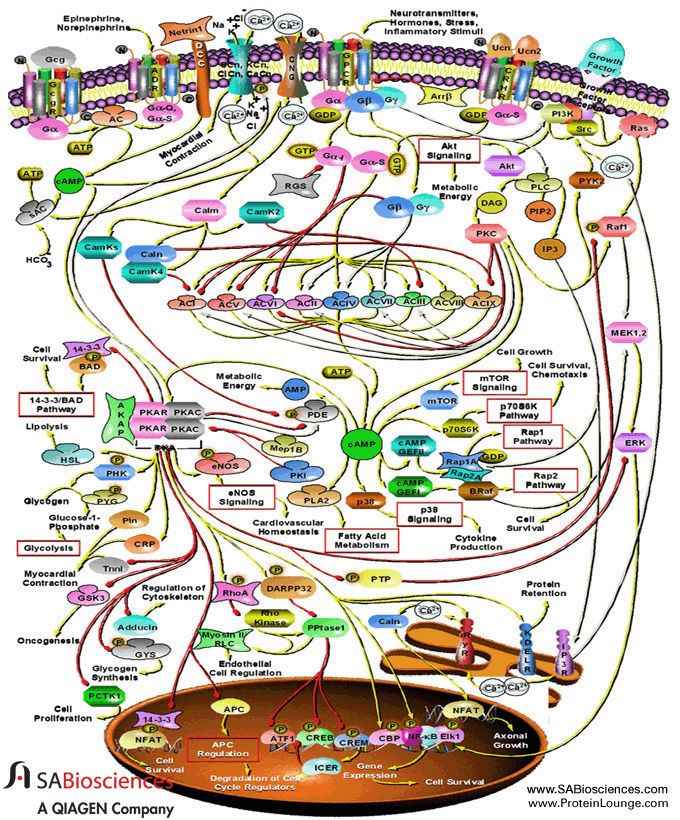

What maintains homeostasis in living beings is a network of signaling systems, mostly involving negative feedback. Any departure from homeostasis is corrected by triggering the opposite chemical reactions. Apart from negative feedback, the information contained in the DNA is extracted to direct the responses of the cell to changes in its environment.
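Negative feedback can be illustrated with a toy model. In this sketch, with a hypothetical set point, gain and disturbance chosen only for illustration, a single concentration is pushed away from its set point once, and at each step the system removes a fixed fraction of the deviation. Real homeostasis involves many coupled, nonlinear loops, so this shows only the principle.

```python
def simulate_feedback(start: float, set_point: float, gain: float,
                      steps: int, shocks: dict[int, float]) -> list[float]:
    """Proportional negative feedback: each step removes a fraction `gain`
    of the current deviation from the set point. `shocks` maps a step index
    to an external disturbance added at that step."""
    level = start
    trace = [level]
    for t in range(steps):
        level += shocks.get(t, 0.0)          # the environment pushes the system
        level -= gain * (level - set_point)  # the response opposes the deviation
        trace.append(level)
    return trace

trace = simulate_feedback(start=5.0, set_point=5.0, gain=0.5, steps=30, shocks={10: 4.0})
print([round(x, 3) for x in trace])
```

After the disturbance, the deviation halves at every step, so the concentration returns toward its set point without overshooting.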

In other words, life is an algorithm. An immensely complex one.

In 1943, the famous physicist Erwin Schrödinger, one of the creators of Quantum Mechanics, had a radical insight about the nature of life, which he explained in a series of lectures at Trinity College in Dublin, Ireland, later published as his famous book What Is Life?

Schrödinger proposed two revolutionary ideas.

The first is that living beings store information in the form of an “aperiodic crystal”—meaning a molecule in which some of the atoms are organized in different sequences encoding that information.

The second is that life could be understood in terms of entropy: a living organism keeps its low entropy state by absorbing energy from its environment and using it to pump entropy to the outside.

Schrödinger’s two insights in What Is Life? were confirmed experimentally.

His aperiodic crystal turned out to be DNA, whose structure was discovered 10 years later, in 1953, by Francis Crick, Rosalind Franklin, James Watson and Maurice Wilkins.

The idea that living beings are “dissipative structures” that use energy to pump out entropy and maintain a chemical balance far from chemical equilibrium was developed by Ilya Prigogine and published in 1955. He was influenced by Alan Turing, one of the founders of computer science. Prigogine was awarded the Nobel Prize in Chemistry in 1977 for this work.

Later work unraveled the subtleties of the genetic algorithm. DNA is transcribed into messenger RNA, which is then translated into the chain of amino acids of the proteins following the genetic code.
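The two steps of this genetic algorithm, transcription and translation, can be sketched in a few lines. This assumes the DNA given is the coding strand (so the mRNA has the same sequence with U in place of T) and uses only a small excerpt of the standard genetic code; the full table has 64 codons.

```python
# A small excerpt of the standard genetic code (mRNA codon -> amino acid).
CODON_TABLE = {
    "AUG": "Met",  # also the usual start codon
    "UUU": "Phe",
    "GGC": "Gly",
    "AAA": "Lys",
    "UAA": "Stop", "UAG": "Stop", "UGA": "Stop",
}

def transcribe(coding_strand: str) -> str:
    """mRNA carries the coding-strand sequence with U in place of T."""
    return coding_strand.upper().replace("T", "U")

def translate(mrna: str) -> list[str]:
    """Read codons three bases at a time from the first AUG until a stop codon."""
    start = mrna.find("AUG")
    if start == -1:
        return []
    protein = []
    for i in range(start, len(mrna) - 2, 3):
        amino_acid = CODON_TABLE[mrna[i:i + 3]]  # KeyError for codons not in this excerpt
        if amino_acid == "Stop":
            break
        protein.append(amino_acid)
    return protein

mrna = transcribe("ATGTTTGGCAAATAA")
print(mrna)             # AUGUUUGGCAAAUAA
print(translate(mrna))  # ['Met', 'Phe', 'Gly', 'Lys']
```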

But this is only a small part of the story. Proteins are veritable nanomachines that perform most of the functions of metabolism. The complexity of intracellular signaling pathways is still being unraveled in the fields of biochemistry and molecular biology.

Evolutionary Biology: evolution as an algorithm

Another way in which the concept of information processing can be used to explain life is understanding evolution as an algorithm.

This algorithm works like this:

1. Generate mutations in the DNA.

2. Translate these mutations into proteins, physiology and behavior.

3. Test these changes against the environment.

4. If they result in non-survival or decreased reproduction, discard the mutation.

5. If they result in survival and increased reproduction, keep the mutation.

6. Go to step 1.
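These six steps map almost directly onto a simple hill-climbing search. In this sketch the genome is a list of bits, the "environment" is a hypothetical target list, and fitness is simply the number of matching positions, standing in for survival and reproduction. Step 2 (expressing the mutation as proteins, physiology and behavior) is collapsed into the identity, and a single genome replaces a whole population, so this illustrates only the loop itself.

```python
import random

def fitness(genome: list[int], environment: list[int]) -> int:
    """Hypothetical stand-in for survival and reproduction: traits matching the environment."""
    return sum(g == e for g, e in zip(genome, environment))

def evolve(environment: list[int], generations: int,
           seed: int = 0) -> tuple[list[int], list[int]]:
    rng = random.Random(seed)
    genome = [rng.randint(0, 1) for _ in environment]
    history = [fitness(genome, environment)]
    for _ in range(generations):
        # Step 1: generate a mutation by flipping one randomly chosen bit.
        mutant = genome.copy()
        mutant[rng.randrange(len(mutant))] ^= 1
        # Steps 2-3: "express" the mutant (here, the identity) and test it.
        # A single flip changes fitness by exactly +1 or -1, so there are no ties.
        if fitness(mutant, environment) > fitness(genome, environment):
            genome = mutant  # Step 5: better fit, keep the mutation.
        # Step 4: otherwise the mutant is discarded and the genome is unchanged.
        history.append(fitness(genome, environment))
        # Step 6: the loop returns to step 1.
    return genome, history

environment = [1, 0] * 10
genome, history = evolve(environment, generations=500)
print(history[0], "->", history[-1])
```

Because harmful mutations are always discarded, fitness never decreases; it climbs until the genome matches this fixed environment.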

Stuart Kauffman developed computer models of the evolution algorithm. In his view, living beings evolved by searching a state space containing all the possible forms they can adopt that are compatible with survival in a particular environment. Such a search algorithm eventually fills all available niches in the environment.

The evolution algorithm is an emergent law. When life originated on Earth, primitive life forms only had tentative homeostasis. Living beings with tighter control over their metabolism outcompeted those with looser control. Reproduction arose as a more effective way to colonize the environment, displacing life forms that were unable to create offspring because they could not convey the information of their homeostasis beyond their own death.

Another big step was to transition from accidental mutation to directed mutation. Radiation and chemistry randomly alter the information in the DNA. Living beings that protected their DNA too much were eventually out-competed by living beings with more mutations, because they could evolve better adaptations to the environment. An uneasy equilibrium was established between the stasis derived from over-protecting DNA and the chaos produced by too many mutations in the DNA.

Eventually, some organisms found a clever solution: have two equal sets of DNA, so a mutation in one set could always be compensated by the original gene in the other set. That way, they could have their cake and eat it, too: lots of mutations combined with backup genes.

Even better: interchange these two sets of DNA with similar organisms to create offspring that were even better adapted. And, in the process, mix and match genes to increase genetic variation, with an effect similar to raising the mutation rate (meiosis).

Roll the dice more to win more often.

Generate more information, but keep previous information safeguarded.

Sexual reproduction had been invented.
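The two tricks described above, backup copies and recombination, can be sketched with a toy diploid genome. The sketch assumes that a gene works if at least one of its two copies is functional (full dominance of the working copy) and models meiosis as a single crossover between two homologous sequences; real meiosis involves multiple crossovers and independent assortment of chromosomes. The function names are hypothetical.

```python
def is_viable(maternal: list[bool], paternal: list[bool]) -> bool:
    """Toy diploid genome: True marks a functional copy of a gene.
    Assumes one working copy per gene is enough (dominance)."""
    return all(a or b for a, b in zip(maternal, paternal))

def crossover(chrom_a: str, chrom_b: str, point: int) -> tuple[str, str]:
    """Single-point recombination: exchange the segments after `point`."""
    return chrom_a[:point] + chrom_b[point:], chrom_b[:point] + chrom_a[point:]

# Each set carries a damaging mutation, but in a different gene.
maternal = [True, True, False, True, True]
paternal = [True, True, True, True, False]
print(is_viable(maternal, paternal))   # True: every gene has a working backup
print(is_viable(maternal, maternal))   # False: the third gene has no working copy

# Recombination mixes the two sets into new combinations.
print(crossover("AAAAA", "bbbbb", 2))  # ('AAbbb', 'bbAAA')
```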

Neuroscience: mind as an algorithm

The algorithm of mutation plus natural selection kept life going for billions of years. We could conceptualize this algorithm as a way to extract information from the environment. A mutation is matched with the environment to extract the result: adaptive or non-adaptive.

However, this is a very inefficient way to extract information from the environment.

Some animals evolved a better way: senses that allow them to rapidly change their metabolism according to changes in their environment. No gene translation was necessary. Changes in enzyme activity and in the membrane potential would suffice.

Eventually, sets of organs evolved to produce fast responses to challenges from the environment: the endocrine system, the immune system and, the fastest of them all, the nervous system.

Before, information from the environment was stored only in the DNA. Now there was an additional information-storage system: neuronal memory.

Evolution kept rolling the dice. The information space of all possible shapes and functions was explored. A new niche was found: increase the memory storage and accelerate the processing speed of the nervous system.

Animals with larger and larger brains arose.

Culture as an algorithm

There was one caveat, however. Unlike the information in the DNA, information in the brain could not be passed to the offspring. It died with the individual.

But one animal species with a tremendously large brain found a solution: increase cooperation between members of its species by developing a language that conveys information between brains. That way, the learning of information can be sped up. We are no longer limited to the sensory experience of a single individual. And information can now be conveyed to the offspring, accumulating through generations.

Eventually, more efficient ways of storing and processing information were invented. Writing. Books. Mathematics. Science. Computers.

And that’s where we are.

A nested hierarchy of information systems

From the point of view of the Information Paradigm, everything that exists can be understood as a nested hierarchy of information systems.

Let me explain this cryptic statement.

One information system is the physical world of elementary particles, forces, planets, stars and galaxies.

On top of it, there is the chemical world of atoms and molecules.

On top of it, there is the biological world of cells, viruses, plants and animals.

On top of it, there is the psychological world of minds.

On top of it, there is the societal world of cultures, economics, arts and science.

It’s a hierarchical system because every step adds a new level of complexity to the previous one.

It’s nested because every step is built upon the previous one and cannot exist without the previous one. Each level encloses and supports the others, like the layers of an onion. That’s what I mean by nested.

I could add that these information systems are contingent, because the properties of the upper levels cannot be predicted from the properties of the lower levels. You cannot predict the properties of living systems from physics and chemistry. You cannot predict the properties of human cultures from biology.

I think that the Information Paradigm provides a perspective from which we can understand everything that exists.

It avoids naturalism’s problem of stepping from the material world to the world of minds and cultures.

It avoids the problem of dualism in explaining the interaction between matter and mind.

It could be considered a form of idealism analogous to panpsychism. However, where panpsychism proposes the existence of an ill-defined consciousness, the Information Paradigm is based on the idea of information, which can be defined and studied scientifically.

The Information Paradigm has one unresolved problem, however. Does information exist in addition to matter and energy? Or could matter/energy be defined as forms of information?

Copyright 2023 Hermes Solenzol
